Recent Blog Posts

On AI, Art, Writing, and the Distillation of Creativity

Can generative AI create art? Two years ago I took my first swing at answering that, at least from my perspective. As AI systems become more advanced, this question and the issues surrounding it have grown in importance. With a new release from OpenAI, it has become a topic of great passion, and one ripe for me to revisit. I would like to explore this topic more deeply than I did previously, in terms of both cultural impact and historical context.

When AI Becomes I

The challenge of defining life when intelligence goes non-biological.

One of the many joys of being human is that we constantly face questions about our existence, from the seemingly simple (why is the sky blue?) to the labyrinthine (what is the meaning of life? does pineapple go on pizza?). Having grown up watching Star Trek, I have long been fascinated by one of these in particular: the question of artificial life. Data, a character who is both relatable and entirely different, has left many wondering if that’s what the future holds.

Millions of Jobs

or: On AI, Job Creation & Destruction, and The Race to Oblivion

It has been 20 years since I first used machine learning to solve a complex business problem. The underlying problem was simple: the company was selling a new service and wanted to know who was most likely to buy it. We had millions of records, and each record had hundreds of fields. It was a vast amount of data, but we had no idea how to extract insight from it. Various data analysts had invested countless hours in finding a pattern, but none was forthcoming.

Security Is a Shell Game

In the world of security, everything comes down to trust; sooner or later you have to trust something. Often, this something is a human. We may build advanced cryptosystems that will survive the heat death of the universe, but dig down layer by layer and you eventually reach a human and their limited memory. While we may build software, hardware, and other systems to protect this chain of trust, it almost always ends with a human.

Whose CVE Is It Anyway?

The latest vulnerability causing headaches across the world is CVE-2023-4863, issued by Google Chrome and described as “Heap buffer overflow in WebP in Google Chrome prior to 116.0.5845.187 allowed a remote attacker to perform an out of bounds memory write via a crafted HTML page”. This same CVE is cited by a number of other vendors whose products are also affected. But is this really a Google Chrome vulnerability?


Recent Security Research

Exploiting the Jackson RCE: CVE-2017-7525

Earlier this year, a vulnerability was discovered in the Jackson data-binding library (a library for Java that allows developers to easily serialize Java objects to JSON and vice versa) that allowed an attacker to exploit deserialization to achieve Remote Code Execution on the server. This vulnerability didn’t seem to get much attention, and even less documentation. Given that this is an easily exploited Remote Code Execution vulnerability with little documentation, I’m sharing my notes on it.

Breaking the NemucodAES Ransomware

The Nemucod ransomware has been around, in various incarnations, for some time. Recently a new variant started spreading via email claiming to be from UPS. This new version changed how files are encrypted, clearly in an attempt to fix a flaw in prior versions that allowed victims to decrypt their files without paying the ransom; as this is a new version, no decryptor was available. My friends at Savage Security contacted me to help save the data of one of their clients; I immediately began studying the cryptography-related portions of the software, while the Savage Security team was busy looking at other portions.

PL/SQL Developer: HTTP to Command Execution

While looking into how PL/SQL Developer, a very popular tool for working with Oracle databases, encrypts passwords, I noticed something interesting. When testing Windows applications, I make it a habit to have Fiddler running to see if there is any interesting traffic – and in this case, there certainly was. PL/SQL Developer has an update mechanism that retrieves a file containing information about available updates to PL/SQL Developer and other components; this file is retrieved via HTTP, meaning that an attacker in a privileged network position could modify it.


Insane Ideas

The Insane Ideas series is a group of blog posts that detail various ideas that I found interesting but didn't pursue due to time restrictions or other factors. The goal of publishing these ideas is to make the concepts available to others, in the hope that they will pursue them - or at least find amusement in them.

Insane Ideas: NFT the Stars

This is part of the Insane Ideas series, a group of blog posts that detail ideas, possible projects, or concepts that may be of interest. These are ideas that I don’t plan to pursue, and are thus available to any and all that would like to do something with them. I hope you find some inspiration – or at least some amusement in this. NFTs are drawing in vast amounts of money; the cryptocurrency community couldn’t be more excited unless Elon sold himself as an NFT.

Insane Ideas: Stock in People

There are many ways to invest in a variety of things, but one hugely promising front has barely begun to emerge, and it could have massive potential for profit and incredible ramifications: the ability to invest in individuals.

Insane Ideas: Blockchain-Based Automated Investment System

A few months ago I was reading about high-frequency trading (HFT) – algorithms that allow investors to make money essentially out of nothing by executing trades at high speed, and leveraging the natural (and artificial) volatility of the market.


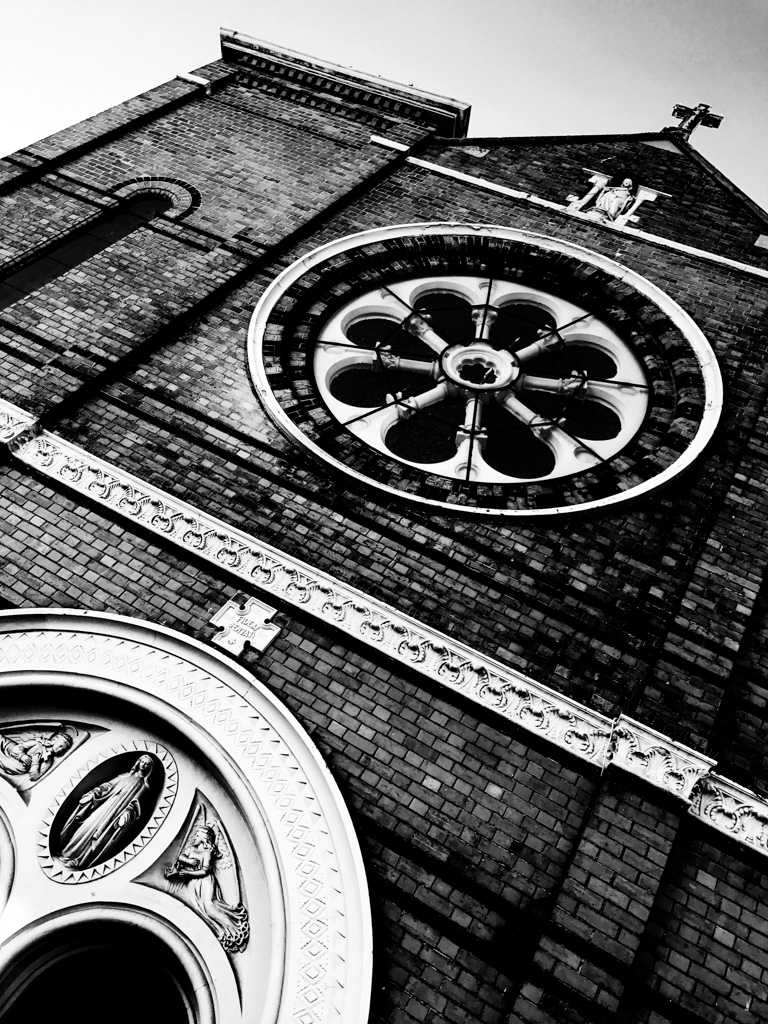

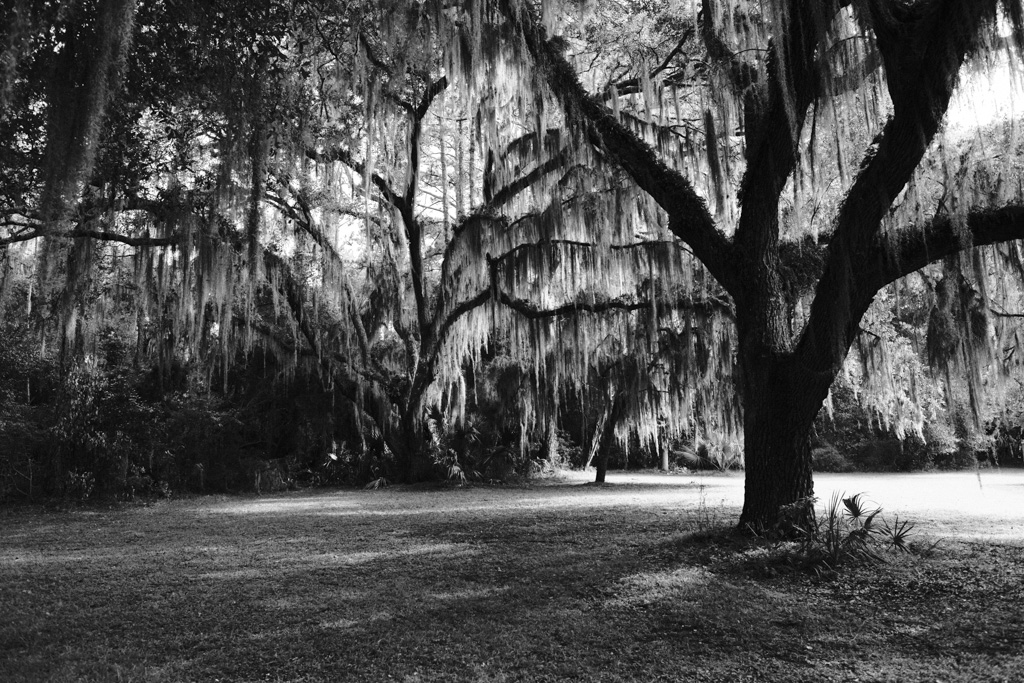

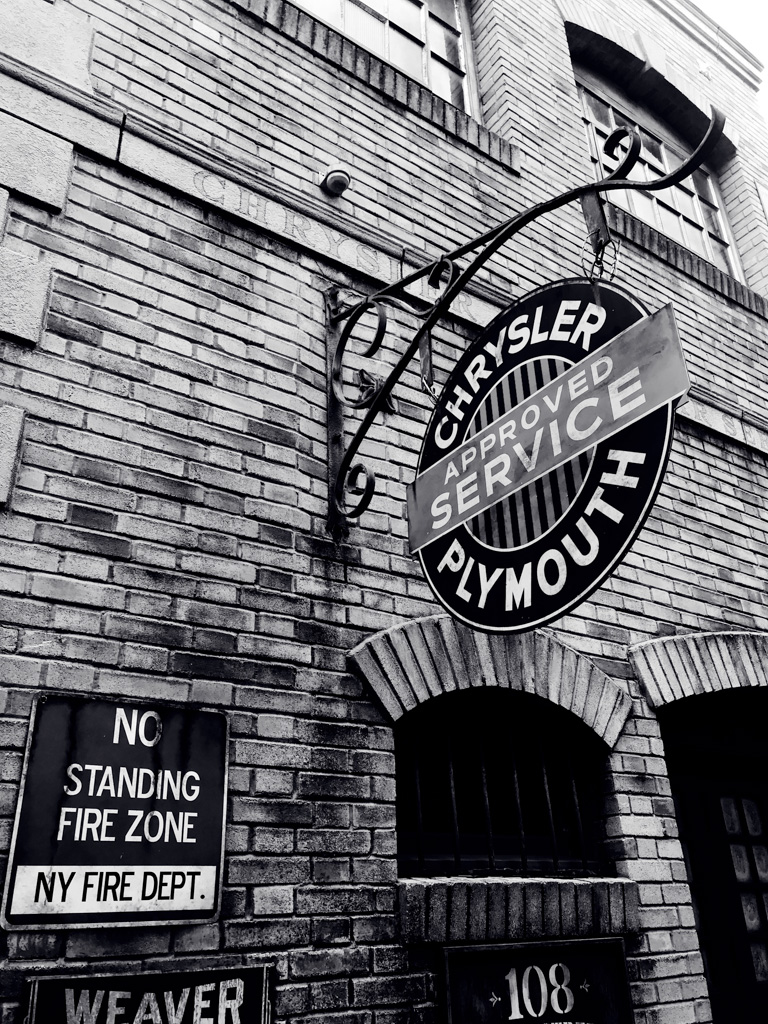

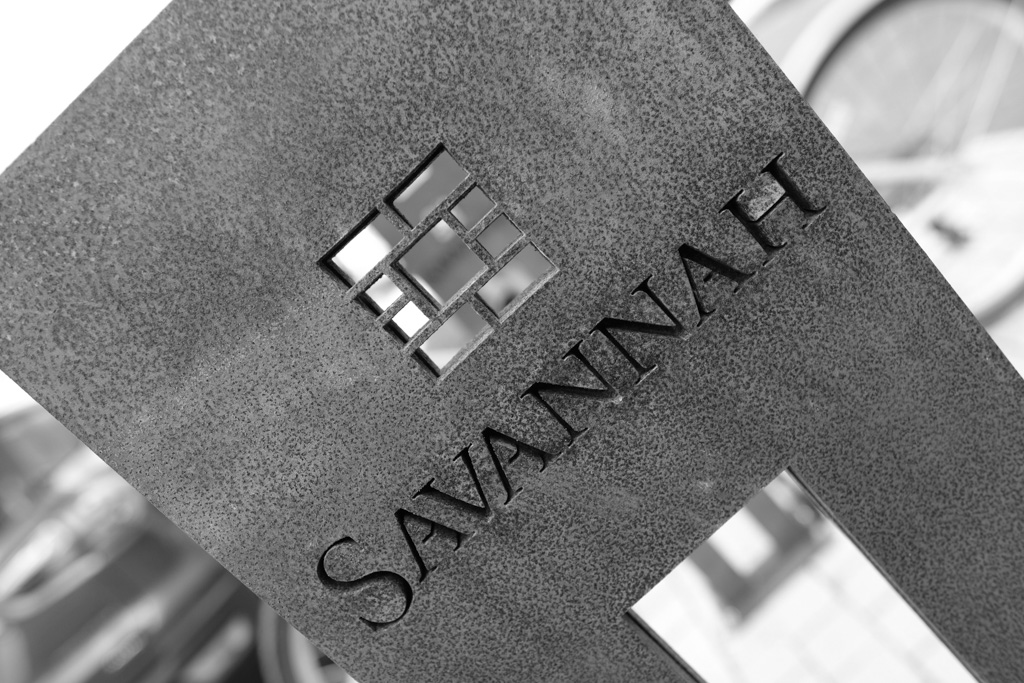

Fine Art Photography

About My Photography | Buy Limited Edition Prints | My Portfolio | My Photo Blog

Projects

- YAWAST - The YAWAST Antecedent Web Application Security Toolkit.

- libsodium-net - The .NET library for libsodium; a modern and easy-to-use crypto library.

- ccsrch - Cross-platform credit card (PAN) search tool for security assessments.

- Underhanded Crypto Contest - A competition to write or modify crypto code that appears to be secure, but actually does something evil.

About Adam Caudill

Adam Caudill is a security leader with over 20 years of experience in security and software development, with a focus on application security, secure communications, and cryptography. Active blogger, open source contributor, writer, photographer, and advocate for user privacy and protection. His work has been cited by many media outlets and publications around the world, from CNN to Wired and countless others.